Various ways to speed up your CORS preflight requests

A custom in-house solution powers Hashnode's content embedding infrastructure. It exposes a simple API consumed by various parts of our app, including blogs running on custom domains. However, soon after integrating the API, we noticed that the API requests originating from blogs were a bit slow at times. We had been using edge caching from the very beginning, so at first we couldn't tell what was wrong. But after checking the network requests, we noticed that the CORS preflight requests were taking 1.5s to 2s at times. 🤯

That makes sense: our blogs run on custom domains, and the API is hosted on a different domain, so the browser has to send a preflight OPTIONS request to check that the origin is allowed to make the actual request. This carries a huge performance penalty because responses to OPTIONS requests are typically not cached at the edge. If your app is not globally distributed, every preflight involves a round trip to your origin server! So, even if you edge-cache the GET/POST response, there will always be a significant delay because of this origin round trip. In our case, it was somewhere between 1.5s and 2s.
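To make that extra round trip concrete, here is a rough sketch of the preflight a browser would send ahead of a cross-origin request. The function name `buildPreflightRequest` and the example origin are made up for illustration; only the header names are the standard CORS ones, and the real request is built by the browser, not by your code.

```javascript
// Illustrative only: approximates the preflight a browser issues on its own.
// `unsafeHeaderNames` is the list of request headers that are not CORS-safelisted.
function buildPreflightRequest(origin, method, unsafeHeaderNames = []) {
  const headers = {
    Origin: origin,
    "Access-Control-Request-Method": method,
  };
  if (unsafeHeaderNames.length > 0) {
    // Browsers send these names lowercased, sorted, and comma-separated.
    headers["Access-Control-Request-Headers"] = unsafeHeaderNames
      .map((name) => name.toLowerCase())
      .sort()
      .join(",");
  }
  return { method: "OPTIONS", headers };
}

// Hypothetical blog origin making a POST with two non-safelisted headers.
const preflight = buildPreflightRequest(
  "https://blog.example.com",
  "POST",
  ["X-Requested-With", "Content-Type"]
);
console.log(preflight);
```

Only after the server answers this OPTIONS request with matching `Access-Control-Allow-*` headers does the browser send the actual GET or POST.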

Now let's see what options we evaluated to fix this:

Making requests same-origin

This came to mind instantly. What if we made the calls same-origin by proxying the requests through the blog's own domain? If we did that, the browser wouldn't need a preflight request at all. That's a good way to fix this problem, but not everyone has the liberty to introduce a proxy. In our case, we want to keep our APIs and front-end separate, and the proxy would add some extra latency as well. So, this was a no-go.
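For readers who can go the proxy route, the core of it is just a URL rewrite. The sketch below is not something we run: `PROXY_PREFIX`, `UPSTREAM_ORIGIN`, and `toUpstreamUrl` are hypothetical names, and a real proxy would also need to forward the method, headers, and body.

```javascript
// Hypothetical values: the path prefix served by the blog's own domain,
// and the separate domain where the embedding API actually lives.
const PROXY_PREFIX = "/api/embeds";
const UPSTREAM_ORIGIN = "https://embeds.example.com";

// Maps a same-origin request URL to the upstream API URL,
// or returns null when the path is not under the proxy prefix.
function toUpstreamUrl(incomingUrl) {
  const url = new URL(incomingUrl);
  const underPrefix =
    url.pathname === PROXY_PREFIX ||
    url.pathname.startsWith(PROXY_PREFIX + "/");
  if (!underPrefix) return null;

  const upstream = new URL(UPSTREAM_ORIGIN);
  upstream.pathname = url.pathname.slice(PROXY_PREFIX.length) || "/";
  upstream.search = url.search; // keep the query string untouched
  return upstream.toString();
}

console.log(toUpstreamUrl("https://blog.example.com/api/embeds/oembed?url=abc"));
```

Because the browser only ever talks to the blog's domain, the request is same-origin and no preflight is sent. The cost is the extra hop through the proxy, which is the latency concern mentioned above.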

Use Access-Control-Max-Age header

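Browsers use this header to determine how long the result of a preflight request can be cached; without it, the default is only 5 seconds. This works well at the browser level, but it doesn't help with the first request from each visitor, which still has to make the slow round trip, so it's not a substitute for answering preflights at the edge. Browsers also cap the value (Chromium at 2 hours, Firefox at 24 hours). If you would like to implement this, it's as simple as sending the following header: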

Access-Control-Max-Age: 86400
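As a rough model of what that header buys you, the sketch below estimates how long a browser would actually keep the preflight result. The caps (2 hours for Chromium, 24 hours for Firefox) and the 5-second default are the values documented by MDN at the time of writing, so treat them as assumptions that may change; `effectivePreflightCacheSeconds` is a made-up helper, and it simplifies parsing by accepting only non-negative integers.

```javascript
// Assumed values, per MDN at the time of writing.
const DEFAULT_MAX_AGE_SECONDS = 5;
const BROWSER_CAP_SECONDS = new Map([
  ["chromium", 7200],
  ["firefox", 86400],
]);

// Returns the number of seconds a browser would cache the preflight result
// for a given Access-Control-Max-Age header value (or a missing header).
function effectivePreflightCacheSeconds(headerValue, browser) {
  if (!BROWSER_CAP_SECONDS.has(browser)) {
    throw new Error(`No cap known for browser: ${browser}`);
  }
  const text = headerValue == null ? "" : String(headerValue).trim();
  // Simplification: anything that is not a plain non-negative integer
  // falls back to the default.
  const requested = /^\d+$/.test(text) ? Number(text) : DEFAULT_MAX_AGE_SECONDS;
  return Math.min(requested, BROWSER_CAP_SECONDS.get(browser));
}

console.log(effectivePreflightCacheSeconds("86400", "chromium")); // 7200
console.log(effectivePreflightCacheSeconds("86400", "firefox"));  // 86400
```

In other words, sending `86400` is harmless, but Chromium-based browsers will still re-run the preflight every two hours.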

Making your request simple

As per MDN:

A preflight request is automatically issued by a browser and in normal cases, front-end developers don't need to craft such requests themselves. It appears when request is qualified as "to be preflighted" and omitted for simple requests.

You can learn more about simple requests in MDN's CORS documentation, but in a nutshell, a simple request is one that uses only a limited set of request methods, headers, and header values. It's not always possible to convert requests to simple requests, which is why this option was also a no-go for us. But if you can make your requests simple, please do so.
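If you want a quick way to check whether a given request would be preflighted, something like the following can help. It is a simplified approximation of the rules, not the full Fetch specification: it ignores the `Range` header, header value length limits, upload listeners, and stream bodies, and `needsPreflight` is a made-up helper name.

```javascript
// CORS-safelisted methods, header names, and Content-Type values.
const SAFE_METHODS = new Set(["GET", "HEAD", "POST"]);
const SAFE_HEADERS = new Set([
  "accept",
  "accept-language",
  "content-language",
  "content-type",
]);
const SAFE_CONTENT_TYPES = new Set([
  "application/x-www-form-urlencoded",
  "multipart/form-data",
  "text/plain",
]);

// Returns true when a cross-origin request with this method and these
// author-set headers would trigger a preflight (approximation only).
function needsPreflight(method, headers = {}) {
  if (!SAFE_METHODS.has(method.toUpperCase())) return true;
  for (const [name, value] of Object.entries(headers)) {
    const lower = name.toLowerCase();
    if (!SAFE_HEADERS.has(lower)) return true;
    if (lower === "content-type") {
      // Compare only the media type, ignoring parameters such as charset.
      const mediaType = String(value).split(";")[0].trim().toLowerCase();
      if (!SAFE_CONTENT_TYPES.has(mediaType)) return true;
    }
  }
  return false;
}

console.log(needsPreflight("GET")); // false
console.log(needsPreflight("POST", { "Content-Type": "application/json" })); // true
```

The second example is the common trap: a JSON `Content-Type` alone is enough to turn an otherwise simple POST into a preflighted one.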

Leveraging Cloudflare Workers

Fortunately, our embedding infrastructure is powered by Cloudflare. In case you are unaware, Cloudflare has an amazing offering called Workers, which lets you run JavaScript code at the edge. Note that the response to an OPTIONS request doesn't need a body; it just needs to carry a handful of access control headers. So, by deploying a worker, we can send these headers from the region closest to our users.

So, to fix our latency issue, we finally deployed a simple worker like this:

addEventListener('fetch', event => {
  event.respondWith(handle(event.request)
    .catch(e => new Response(null, {
      status: 500,
      statusText: "Internal Server Error"
    }))
  )
})

async function handle(request) {
  if (request.method === "OPTIONS") {
    return handleOptions()
  } else {
    // Pass-through to origin.
    // This subrequest will get a cached response due to CF page rules
    return fetch(request)
  }
}

const corsHeaders = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "GET, HEAD, POST, OPTIONS",
  "Access-Control-Allow-Headers": "*",
}

function handleOptions() {
  // Handle CORS pre-flight request.
  return new Response(null, {
    headers: corsHeaders
  })
}

We then mapped it to our embedding service. As a result, all the OPTIONS requests are routed to the nearest Cloudflare data center, and the access control headers are served with minimal latency. The other requests pass through, which means they either hit the origin (if uncached) or are served from the Cloudflare edge cache (if previously cached).
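The edge approach and the `Access-Control-Max-Age` header are not mutually exclusive. As a possible variation (not what we deployed), the worker could also tell browsers to cache the preflight result, so that repeat requests skip even the fast edge round trip. The helper name `preflightResponseInit`, the two-hour value, and the 204 status are assumptions chosen for illustration.

```javascript
// Assumption: 7200 seconds (two hours) is an illustrative value.
const PREFLIGHT_MAX_AGE_SECONDS = 7200;

const corsHeaders = {
  "Access-Control-Allow-Origin": "*",
  "Access-Control-Allow-Methods": "GET, HEAD, POST, OPTIONS",
  "Access-Control-Allow-Headers": "*",
};

// Builds the init object for the preflight response: the same CORS headers
// as before, plus a max-age so browsers can reuse the result.
function preflightResponseInit(maxAgeSeconds = PREFLIGHT_MAX_AGE_SECONDS) {
  return {
    status: 204, // no body is needed for a preflight response
    headers: {
      ...corsHeaders,
      "Access-Control-Max-Age": String(maxAgeSeconds),
    },
  };
}

// Inside the worker, handleOptions() could then be written as:
//   return new Response(null, preflightResponseInit())
console.log(preflightResponseInit());
```

Keeping the header construction in a plain function like this also makes it easy to check outside the Workers runtime.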

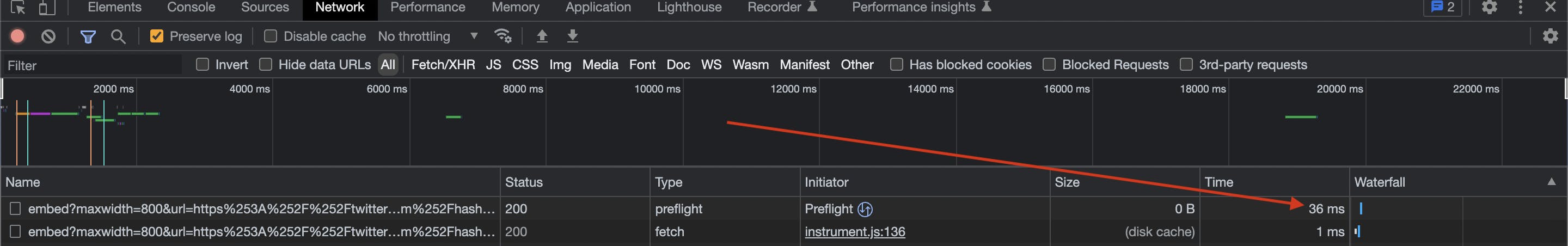

In our case, it led to a drastic improvement in performance (from 1.5-2s to <50ms).

It took us 5 minutes to deploy a worker and speed things up significantly. We didn't have to add a proxy or do a huge refactor. This is why I am a big fan of serverless edge computing. Workers are just one example. You could achieve the same thing on other platforms such as Vercel (using middleware), Lambda@Edge, Netlify, and so on.